本节介绍 MVTec 的 HALCON 22.11 机器视觉软件库中包含的参数。

信息:

这些参数可直接在 mapp Vision HMI 应用程序中更改(参见配置视觉功能)。

信息:

决定 POWERLINK 帧长度的单个配置参数只能在 AS 软件的配置过程中更改(即循环更改)。因此,这些参数只能在运行时读取,并标识为常量。

UCHAR |

1 至 255 |

匹配结果的最大数量 |

|

R/W1 |

|

配置参数 |

|||||

UCHAR |

0 至 100 |

搜索匹配的系数。从 0(安全但缓慢)到 1(快速但可能 "忽略 "匹配),增量为 0.01。 默认值 = 0.9 |

|

R/W |

|

UCHAR |

0 至 2 |

决定是否允许搜索到的模型部分位于图像之外。 0 = 假 1 = 真 2 = 系统(默认值) |

|

R/W |

|

模型参数 |

|||||

UCHAR |

0 至 2 |

0: NCC 模型 1: 形状模型 2: 可变形形状模型 |

|

R |

|

|

|

|

|

|

|

整数 |

0 至 10 |

金字塔的最大层数。 默认值 = 0(自动) |

|

R/W2 |

|

双 |

-360 至 360 |

图案的最小旋转角度。 默认值 = -0.39 |

|

R/W2 |

|

双 |

0 至 360 |

角度范围的扩展。 默认值 = 0.79 |

|

R/W2 |

|

双倍 |

>0.0 至 ≤11.25 |

角度的增量(分辨率)。 默认值 = 0(自动) |

|

R/W2 |

|

字符串 |

右侧指定的字符串 |

用于匹配的度量类型。 "use_polarity"(默认值) "忽略全球极性 "忽略本地极性 |

|

R/W2 |

|

双 |

0 至 2.55 |

模型的最小缩放比例。 默认值:0.9 |

|

R/W2 |

|

双 |

0 至 2.55 |

模型的最大缩放比例。限制条件:ScaleMax ≥ ScaleMin 默认值:1.1 |

|

R/W2 |

|

双 |

0 至 0.2 |

0(自动) 0.001 - 0.200 |

|

R/W2 |

|

字符串 |

右侧指定的字符串 |

优化类型。 "自动"(默认值) 无 "低优化点 "点数减少中等 点数减少率高" "点数减少率低" "点数减少率中" "点数减少率高" |

|

R/W2 |

|

双 |

-1 至 255 |

模型对比度最小值、模型对比度最大值和模型最小尺寸的设置选项 "auto" if Min == -1 && Max == -1 && MinSize == -1 [ "auto_contrast_hyst",MinSize] 如果 Min == -1 && Max == -1 && MinSize ≥ 0 [ "auto_minsize",Min,Max] if MinSize == -1 && Min ≥ 0 && Max ≥ 0 && Min < High [ "auto_minsize",对比度] if MinSize == -1 && Min ≥ 0 && Max < 0 && Min > High : 对比度 := Min [ "auto_contrast",MinSize]如果 Min == -1 && Min > High(即 Max ≤ -2)&& MinSize ≥ 0 [ "auto_contrast", "auto_minsize"] 如果 Max == -2 && Min == -1 && MinSize == -1 [Min, Max, MinSize] 否则。 这意味着,如果 Min ≤ Max,则这两个参数描述的是有效的滞后参数;因此,"滞后 "会被选中。如果最小值 > 最大值,则这两个参数不是有效的磁滞参数。因此,"对比度 "被心智选中,最小值包含对比度值。最大值总是 ≤-1。 |

|

R/W |

|

双 |

-2 至 255 |

|

R/W |

||

双 |

-1至2^31-1 |

|

R/W |

||

整数 |

0 至 10 |

金字塔的最大层数。 默认值 = 0(使用 ModulNumLevels 的值) |

|

R/W2 |

|

双 |

-360 至 360 |

图案最小的旋转角度。默认值 = -0.39 |

|

R/W2 |

|

双 |

0 至 360 |

角度范围的扩展。默认值 = 0.79 |

|

R/W2 |

|

字符串 |

对于 "形状" |

如果不等于 "none",子像素精度。 "无" 插值 "最小二乘"(默认值) "最小二乘高 "最小二乘非常高 "最大变形 1 "至 "最大变形 6" R/W2 |

|

R/W2 |

|

整数 |

0 至 32 |

找到的对象与模型的最大偏差。以像素为单位。 |

|

R/W2 |

|

整数 |

-1 至 255 |

搜索图像中物体的最小对比度。默认值 = -1 (自动)。限制条件:ScaleMax ≥ ScaleMin |

|

R/W2 |

|

双倍 |

>0.0 至 ≤2.55 |

模型发生的最小缩放。 默认值:0.9 |

|

R/W2 |

|

双倍 |

>0.0 至 ≤2.55 |

模型的最大缩放比例。 默认值:1.1 |

|

R/W2 |

|

字符串 |

右侧指定的字符串 |

用于匹配的度量类型。 "使用极性 "忽略全球极性 "忽略本地极性 |

|

R/W2 |

|

|

-1 0 至 26224.0 |

PositionX 坐标输出所指向的模型参考位置(从 "执行 "开始) -1:使用模型的几何中心(由 VF 确定) |

|

R/W |

|

|

-1 0 至 26224.0 |

PositionY 的输出坐标所指向的模型参考位置(从执行开始) -1:使用模型的几何中心(由 VF 确定) |

|

R/W |

|

1 |

配置参数在运行时保持不变,此时只能 "读取"。 |

|---|---|

2 |

本机支持 HALCON 标量类型。这些数据类型是 •双(IEEE 64 位浮点数) •整数(Hlong) •字符串 |

常量

最大结果数

运行时不可修改的变量。仅在 AS 软件上下文中需要使用。保留内存块的最大数量。永久定义匹配结果的最大数量。

ShapeSearchGreediness

参数 ShapeSearchGreediness 决定搜索的 "贪婪 "程度。如果 ShapeSearchGreediness = 0,就会使用安全搜索启发式,只要其他参数设置得当,只要模型位于图像中,就一定能找到它。不过,这种搜索方式相对耗时。ShapeSearchGreediness = 1,使用非安全搜索启发式,在极少数情况下,即使模型在图像中可见,也可能找不到模型。ShapeSearchGreediness = 1 可以最大限度地提高搜索速度。在大多数情况下,如果 ShapeSearchGreediness = 0.9,就能安全地找到形状模型。

形状搜索边界形状模型

定义用于查找形状模型 ModelNumber 的类型(如 ShapeModel),是否允许搜索到的模型位于图像之外(即允许超出图像边界)。

ShapeSearchBorderShapeModels 的值可以是 "真"、"假 "或 "系统"。如果设置为默认值 "System",则会使用为 ShapeSearchBorderShapeModels 设置的全系统值(FALSE)。

匹配类型

在选择类型时,除了基本属性外,还必须注意模型的数据大小差异很大。

•通过形状模型或可变形形状模型导入的单个模型需要几千字节的 RAM。

•而通过 NCC 模型导入的模型则需要几兆内存。

信息:

在创建应用程序时,必须根据摄像机和 CPU 的可用 RAM 来考虑模型的总内存需求!尤其是 NCC 模型的选择不能太大。

例如,一组 10-15 个 NCC 模型可能很快就需要超过 100 MB 的内存。然而,作为形状模型创建的相同模型只需要几兆字节。

信息:

与模型相比,NCC 模型的绘制 ROI 应该非常窄,否则搜索也将需要大量内存。

例如,当使用 130 万像素传感器执行全面搜索(ModelAngle 从 0 到 360)时,如果 ROI 面积大于图像大小的 1/4,几分钟后搜索就会因内存不足而中止。

值 |

信息 |

|

|---|---|---|

USINT |

0 |

NCC 模型(基于交叉相关的匹配) 对散焦、形状的细微变化和光照的线性变化不敏感。 对结构化物体效果良好。 但是,对无序、遮挡、非线性光照变化、缩放或多通道图像无效。 教入模型的大小在兆字节范围内。 |

1 |

形状模型(基于形状的匹配) 不受杂波、遮挡、非线性光照变化、缩放、散焦和轻微形状变形的影响。 可处理多通道图像。可同时用于多个模型。 但在处理某些纹理时有困难。 教入模型的大小在千字节范围内。 |

|

|

2 |

可变形形状模型(基于形状的匹配) 标准形状模型无法找到与所学模型有轻微变形的物体,或者只能找到精度较低的物体。在这种情况下可以使用这种模型。相对于基础模型的最大允许变形量由参数 MaxDeformation 设定。参数单位为像素,介于 0(无变形)和 32 之间。例如,如果最多允许 2 个像素的变形,则必须将参数设置为 2。如果允许变形(MaxDeformation > 0),为了获得有意义的分数,建议使用最小二乘参数 SearchSubpixelMode。 教入模型的大小在千字节范围内。 |

模型级数

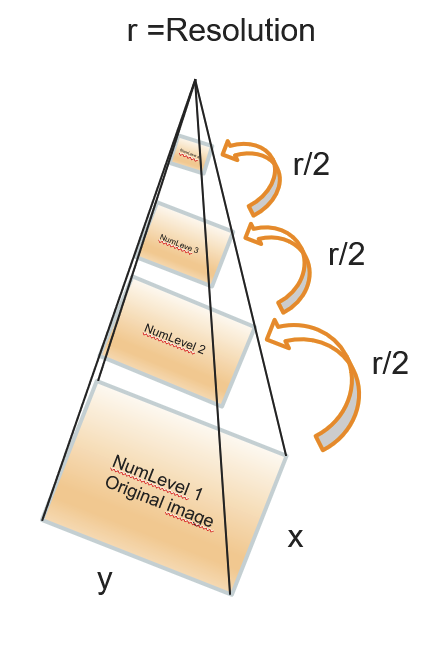

ModelNumLevel 决定了教入模型和所用图像各保留一半分辨率的频率(关键字:金字塔级别)。ModelNumLevel = 1 表示 mapp Vision HMI 应用程序中显示的原始图像。每增加一级,分辨率就降低一半。如果 ModelNumLevel = 3,则分辨率为原始图像的四分之一。

该值应尽可能大,因为这将大大加快查找模型的过程。不过,在选择 ModelNumLevels 时,重要的是要确保模型在金字塔最高层仍可识别,并且不会丢失任何结构。如果为 ModelNumLevels 指定的值为 0,函数将自动选择金字塔的层数。

信息:

ModelNumLevel 越高,分辨率越低。这使得函数运行速度更快,但在某些情况下,分辨率的降低会使模型发生很大变化,以至于不再提供匹配功能。

在极少数情况下,使用 ModelNumLevel 自动计算金字塔层数时,可能会出现金字塔层数过高或过低的情况。如果金字塔级数过高,图像中可能无法识别模型,或者必须选择非常低的 MinScore 或 ShapeSearchGreediness 参数进行搜索才能找到模型。如果金字塔步数设置过低,可能会导致搜索过程中运行时间增加。在这种情况下,应重新选择金字塔步数。另请参阅匹配 - 匹配等级值(分数)的影响因素。

模型角度起始值

参数 ModelAngleStart 为图像中模型的可能旋转设定了角度范围。

模型角度范围

参数 ModelAngleExtent 定义了图像中模型可能旋转的起始角度后的角度范围。

因此在执行搜索时,只能在此角度范围内找到模型。

模型角度步长

参数 ModelAngleStep 指定所选范围内角度间的步长。因此,ModelAngleStep 参数指定了可达到的角度精度。该值应根据物体的大小来选择。较小的模型在图像中只有少量不同的离散旋转。因此,对于较小的模型,ModelAngleStep 应该更大。如果 ModelAngleExtent 不是 ModelAngleStep 的整数倍,ModelAngleStep 会相应调整。为确保搜索不旋转的模型实例时返回的角度正好为 0.0,可能旋转的角度范围调整如下:如果没有一个正整数值 n 能使 ModelAngleStart + n x ModelAngleStep 恰好返回 0,则 ModelAngleStart 最多减少 ModelAngleStep,ModelAngleExtent 增加 ModelAngleStep。

模型度量

参数 ModelMetric 指定在什么条件下仍能识别图像中的图案(即用于匹配的 "度量 "类型)。

值 |

信息 |

|

|---|---|---|

字符串 |

使用极性 (默认值) |

图像中的对象必须具有与模型相同的对比度属性。例如,如果模型是深色背景上的明亮物体,那么只有当物体比背景亮时,才能在图像中找到它。 |

忽略全球极性 |

即使对比度全局相反,也能在图像中找到物体。在上例中,即使物体比背景暗,也会被找到。在这种情况下,搜索的执行时间会略有增加。 |

|

忽略局部极性 |

在这种情况下,即使对比度发生局部变化,也能找到模型。例如,如果对象由平均灰度值的一部分组成,而在该平均灰度值上可能存在深色或浅色的子对象,这种模式就非常有用。不过,由于在这种情况下搜索的运行时间会大大增加,因此在这种情况下,通常更合理的做法是教导多个模型,然后对每个模型进行搜索。 |

模型刻度最小值

模型标度最大值

模型刻度步

如果图像中出现不同大小的模型,则可以使用缩放参数 ModelScaleMin、ModelScaleMax 和 ModelScaleStep 以及 SearchScaleMin 和 SearchScaleMax 来执行示教过程和搜索。

缩放是各向同性的,即在所有方向上都一样。

对于示教过程,需要注意的是,为 ModelScaleMin、ModelScaleMax 和 ModelScaleStep 确定的缩放比例越大,示教过程中生成的数据就会越多。因此,示教过程会减慢。这里确定的值是通过 SearchScaleMin 和 SearchScaleMax 搜索时必须遵守的阈值。因此,搜索所需的阈值应在示教过程中就已知晓。

模型优化

对于特别大的模型,也可以通过设置参数 ModelOptimization 将模型点数设置为 "无 "以外的值。如果模型优化 ="无",则会保存所有模型点。否则,将根据参数 ModelOptimization 减少模型点数。如果点数减少,可能需要在执行搜索时将参数 ShapeSearchGreediness 设为较低值(例如 0.7 或 0.8)。对于较小的模型,减少点数通常不会导致任何加速。如果为模型优化指定了 "auto"(自动),则在模型教学过程中会自动减少点数。

模型对比度最小值

模型对比度最大值

模型最小尺寸

如果图像中的模型应具有一定的对比度,则可以使用对比度参数 ModelContrastMin、ModelContrastMax 和 ModelMinSize 以及 SearchMinContrast 来实现示教过程和搜索。对比度参数尤其适用于检测基于形状的模型的边缘。

这些参数用于定义模型各个像素必须具有的灰度值对比度。对比度是物体和背景之间以及物体不同部分之间局部灰度值差异的度量。对比度的选择应确保所搜索图案的重要特征(即所有需要的边缘)都能用于模型。

也可以通过将模型对比度最小值(ModelContrastMin)、模型对比度最大值(ModelContrastMax)和模型最小尺寸(ModelMinSize)设为-1 来自动确定对比度。这 3 个参数将在随后生成模型时自动写入。

在某些情况下,自动确定对比度阈值可能达不到预期效果。例如,如果某些模型组件应被排除或整合到模型中,或者如果对象由多个不同的对比度级别组成。在这种情况下,最好手动选择参数。

搜索数量级

搜索层数(SearchNumLevels)定义了搜索中应使用的金字塔层数。在生成过程中,可以根据 ModelNumLevels 所指定的区域来调整层次数。如果 NumLevels 指定为 0,则使用 ModelNumLevels 指定的层数。

在某些情况下,金字塔的层数可能过高,例如在模型的教学过程中通过 ModelNumLevels 自动确定的层数。这样一来,可能具有很高最终得分(高 Score(n))的优秀匹配候选对象就会被排除在最高的金字塔层级之外,从而无法找到(另请参阅 "匹配 - 对匹配等级值(得分)的影响")。

与其现在将 MinScore 设置为一个非常低的值以找到所有匹配,不如在 SearchNumLevels 中使用一个比 ModelNumLevels 略低的值。这通常会在速度和稳健性方面取得更好的结果。

搜索角度起始值

参数 SearchAngleStart 和 SearchAngleExtent 定义了搜索模型的角度范围。如有必要,角度范围将根据示教过程中创建模型时指定的区域进行修剪。特别是,这意味着模型和搜索的角度范围必须重叠。还需要注意的是,在某些情况下,可能会发现旋转角度略微超出指定角度范围的实例。如果指定的角度范围小于创建模型时指定的范围,就会出现这种情况。

搜索角度范围

请参阅SearchAngleStart。

搜索子像素模式

参数 SearchSubPixelMode 用于指定是否以亚像素精度进行提取。

如果 SearchSubPixelMode 设置为 "None(无)",则模型的位置将只确定到像素,或以模型教入时指定的角度分辨率确定。

如果将 SearchSubPixelMode 设置为 "插值",则位置和旋转都将以亚像素精度确定。在这种情况下,将使用函数 Score 对模型的位置进行插值。这种模式几乎不需要计算时间,在大多数应用中都能提供足够的精度。

在某些应用中,尽可能高的精度非常重要。在这种情况下,模型的位置可以通过调整计算来确定,即通过最小化模型点与相应像素之间的距离("最小二乘调整")来确定。与 "插值法 "相比,这种模式需要额外的计算时间。搜索最小距离的精度可通过不同模式("最小二乘"、"最小二乘高 "和 "最小二乘非常高")来确定。不过,选择的精度越高,提取子像素所需的时间就越长。

信息:

搜索子像素模式通常应选择 "插值"。如果需要均衡化,则应选择 "最小二乘",因为这样可以在运行时间和精确度之间取得最佳平衡。

在某些情况下,与模型相比在图像中出现轻微变形的对象要么无法找到,要么只能以较 低的精度找到。对于此类对象,可以在参数 SearchSubPixelMode 中以像素为单位额外指定允许的最大对象变形量。

搜索最大变形

与模型相比,图像中出现轻微变形的物体可通过此参数进行补偿。相对于底层模型的最大允许变形量由 SearchMaxDeformation 设置。

单位为像素,介于 0(无变形)和 32 之间。例如,如果允许的最大变形量为 2 像素,则必须将该参数设置为 2。

除 SearchMaxDeformation 外,参数 SearchSubPixelMode 也可用于指定变形。

信息:

使用该参数会增加运行时间。

搜索最小对比度

SearchMinContrast 定义了检测到模型时,模型在图像中必须具有的最小灰度值对比度。换句话说,该参数代表了图像中图案和噪点之间的界限。因此,由图像中的噪点引起的灰度值变化范围就是一个很好的选择。例如,如果灰度值因噪点而在 10 个灰度值等级的范围内波动,则应将 SearchMinContrast 设置为 10。SearchMinContrast 必须小于设置的对比度(请参阅 ModelMinSize)。如果以后要在对比度很低的图像中识别模型,SearchMinContrast 必须设置为相应的低值。如果要检测到有明显遮挡的模型,SearchMinContrast 的选择应略大于噪声造成的灰度值范围,以确保对遮挡模型进行稳健而准确的位置估计。

如果将 SearchMinContrast 设置为 "自动",则会根据模型图像中的噪点自动选择最小对比度。因此,只有在检测过程中预期的图像噪点与模型图像中的噪点一致时,自动确定才有意义。在某些情况下,增加自动确定的值可能也会有用,以获得更强的抗遮挡能力。

信息:

如果教入了多个不同的形状模型,则创建时应使用相同的 SearchMinContrast 值。如果不是这种情况,则在执行(执行)时将使用教入模型中的最低值。

搜索最小对比度

对于示教过程,需要注意的是,为 ModelScaleMin、ModelScaleMax 和 ModelScaleStep 确定的缩放比例越大,示教过程中生成的数据就会越多。因此,示教过程会减慢。这里确定的值是通过 SearchScaleMin 和 SearchScaleMax 搜索时必须遵守的阈值。因此,在示教过程中就应该知道搜索所需的阈值。

搜索标度最大值

对于示教过程,需要注意的是,为 ModelScaleMin、ModelScaleMax 和 ModelScaleStep 确定的缩放比例越大,示教过程中生成的数据就会越多。因此,示教过程会减慢。这里确定的值是通过 SearchScaleMin 和 SearchScaleMax 搜索时必须遵守的阈值。因此,在示教过程中就应该知道搜索所需的阈值。

模型度量模式

值 |

信息 |

|

|---|---|---|

字符串 |

使用对比度 |

图像中的对象必须具有与模型相同的对比度属性。例如,如果模型是深色背景上的明亮物体,那么只有当物体比背景亮时,才能在图像中找到它。 |

忽略全球极性 |

即使对比度全局相反,也能在图像中找到物体。在上例中,即使物体比背景暗,也会被找到。在这种情况下,搜索的执行时间会略有增加。 |

|

忽略局部极性 |

在这种情况下,即使对比度发生局部变化,也能找到模型。例如,如果对象由平均灰度值的一部分组成,而在该平均灰度值上可能存在深色或浅色的子对象,这种模式就非常有用。不过,由于在这种情况下搜索的运行时间会大大增加,因此在这种情况下,通常更合理的做法是教入多个模型,然后对每个模型进行搜索。 |

参考位置 X

PositionX 的输出坐标所指向的模型的参考位置(来自 Execute)。

参考位置 Y

位置 X 的输出坐标所指向的模型的参考位置(来自 Execute)。

This section describes the parameters included from the HALCON 22.11 machine vision software library by MVTec.

Information:

These parameters can be changed directly in the mapp Vision HMI application (see Configuring vision functions).

Information:

Individual configuration parameters that determine the length of a POWERLINK frame can only be changed during configuration in Automation Studio (i.e. acyclically). These parameters can therefore only be read at runtime and are identified as constants.

| Parameter | Data type | Value range | Description | Access |
|---|---|---|---|---|
| NumResultsMax | UCHAR | 1 to 255 | Maximum number of Matching results | R/W ¹ |
| Configuration parameters | | | | |
| ShapeSearchGreediness | UCHAR | 0 to 100 | Factor for searching for matches. From 0 (safe but slow) to 1 (fast, but matches can be "overlooked") in increments of 0.01. Default value = 0.9 | R/W |
| ShapeSearchBorderShapeModels | UCHAR | 0 to 2 | Determines whether the searched models are permitted to be partially outside the image. 0 = False, 1 = True, 2 = System (default value) | R/W |
| Model parameters | | | | |
| Type | UCHAR | 0 to 2 | 0: NCC model, 1: Shape model, 2: Deformable shape model | R |
| ModelNumLevels | Integer | 0 to 10 | Maximum number of pyramid levels. Default value = 0 (auto) | R/W ² |
| ModelAngleStart | Double | -360 to 360 | Smallest occurring rotation of the pattern. Default value = -0.39 | R/W ² |
| ModelAngleExtent | Double | 0 to 360 | Extent of the angular range. Default value = 0.79 | R/W ² |
| ModelAngleStep | Double | >0.0 to ≤11.25 | Increment of the angles (resolution). Default value = 0 (auto) | R/W ² |
| ModelMetric | String | Strings as specified on the right | Type of metric used for matching. "use_polarity" (default value), "ignore_global_polarity", "ignore_local_polarity" | R/W ² |
| ModelScaleMin | Double | 0 to 2.55 | Smallest occurring scaling of the model. Default value: 0.9 | R/W ² |
| ModelScaleMax | Double | 0 to 2.55 | Largest occurring scaling of the model. Restriction: ModelScaleMax ≥ ModelScaleMin. Default value: 1.1 | R/W ² |
| ModelScaleStep | Double | 0 to 0.2 | 0 (auto) or 0.001 to 0.200 | R/W ² |
| ModelOptimization | String | Strings as specified on the right | Type of optimization. "auto" (default value), "none", "point_reduction_low", "point_reduction_medium", "point_reduction_high" | R/W ² |
| ModelContrastMin | Double | -1 to 255 | See the setting options listed after this table. | R/W |
| ModelContrastMax | Double | -2 to 255 | See the setting options listed after this table. | R/W |
| ModelMinSize | Double | -1 to 2^31-1 | See the setting options listed after this table. | R/W |
| SearchNumLevels | Integer | 0 to 10 | Maximum number of pyramid levels. Default value = 0 (uses the value from ModelNumLevels) | R/W ² |
| SearchAngleStart | Double | -360 to 360 | Smallest occurring rotation of the pattern. Default value = -0.39 | R/W ² |
| SearchAngleExtent | Double | 0 to 360 | Extent of the angular range. Default value = 0.79 | R/W ² |
| SearchSubPixelMode | String | For "Shape" | Subpixel accuracy if not equal to "none". "none", "interpolation", "least_squares" (default value), "least_squares_high", "least_squares_very_high", "max_deformation 1" to "max_deformation 6" | R/W ² |
| SearchMaxDeformation | Integer | 0 to 32 | Maximum deviation of the found object from the model. Specification in pixels. | R/W ² |
| SearchMinContrast | Integer | -1 to 255 | Minimum contrast of the object in the search images. Default value = -1 (auto) | R/W ² |
| SearchScaleMin | Double | >0.0 to ≤2.55 | Smallest occurring scaling of the model. Default value: 0.9 | R/W ² |
| SearchScaleMax | Double | >0.0 to ≤2.55 | Largest occurring scaling of the model. Restriction: SearchScaleMax ≥ SearchScaleMin. Default value: 1.1 | R/W ² |
| ModelMetricMode | String | Strings as specified on the right | Type of metric used for matching. "use_polarity", "ignore_global_polarity", "ignore_local_polarity" | R/W ² |
| ReferencePositionX | | -1, or 0 to 26224.0 | Reference position of the model to which the output coordinates of PositionX refer (from Execute). -1: The geometric center of the model is used (determined by the VF). | R/W |
| ReferencePositionY | | -1, or 0 to 26224.0 | Reference position of the model to which the output coordinates of PositionY refer (from Execute). -1: The geometric center of the model is used (determined by the VF). | R/W |

Setting options for ModelContrastMin (Min), ModelContrastMax (Max) and ModelMinSize (MinSize):

•"auto" if Min == -1 && Max == -1 && MinSize == -1

•["auto_contrast_hyst", MinSize] if Min == -1 && Max == -1 && MinSize ≥ 0

•["auto_minsize", Min, Max] if MinSize == -1 && Min ≥ 0 && Max ≥ 0 && Min < Max

•["auto_minsize", Contrast] if MinSize == -1 && Min ≥ 0 && Max < 0 (so Min > Max), with Contrast := Min

•["auto_contrast", MinSize] if Min == -1 && Min > Max (ergo Max ≤ -2) && MinSize ≥ 0

•["auto_contrast", "auto_minsize"] if Max == -2 && Min == -1 && MinSize == -1

•[Min, Max, MinSize] otherwise

This means that if Min ≤ Max, the two values describe valid hysteresis parameters, so "Hysteresis" is implicitly selected. If Min > Max, they are not valid hysteresis parameters. In that case, "Contrast" is implicitly selected, Min contains the contrast value and Max is always ≤ -1.

¹ The configuration parameter is constant at runtime and in this case can only be read.

² HALCON scalar types are natively supported. These data types are:

•Double (IEEE 64-bit float)

•Integer (Hlong)

•String

Constants

NumResultsMax

Variable that cannot be modified at runtime. Only necessary in the Automation Studio context. Reserves the maximum number of memory blocks. Permanently defines the maximum number of Matching results.

ShapeSearchGreediness

Parameter ShapeSearchGreediness determines how "greedy" the search is. If ShapeSearchGreediness = 0, a safe search heuristic is used that always finds the model if it is located in the image, provided the other parameters are set appropriately. This search is relatively time-consuming, however. If ShapeSearchGreediness = 1, an unsafe search heuristic is used that in rare cases may not find the model even though it is visible in the image; this setting maximizes search speed. In the majority of cases, the shape model is found reliably with ShapeSearchGreediness = 0.9.
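The parameter table gives the value range as 0 to 100, while the factor itself runs from 0 to 1 in increments of 0.01. A minimal sketch of the conversion, assuming the transmitted value is simply the factor multiplied by 100 (this mapping and both function names are illustrative assumptions, not part of mapp Vision):

```python
def greediness_to_raw(factor: float) -> int:
    """Convert a greediness factor (0.0 to 1.0) to the assumed 0..100 raw value."""
    if not 0.0 <= factor <= 1.0:
        raise ValueError("greediness factor must be between 0.0 and 1.0")
    # round() absorbs floating-point noise such as 0.9 * 100 = 90.00000000000001
    return round(factor * 100)


def raw_to_greediness(raw: int) -> float:
    """Convert the assumed 0..100 raw value back to a factor in steps of 0.01."""
    if not 0 <= raw <= 100:
        raise ValueError("raw greediness must be between 0 and 100")
    return raw / 100


print(greediness_to_raw(0.9))   # raw value for the default factor
print(raw_to_greediness(70))    # a lower factor, e.g. after point reduction
```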

ShapeSearchBorderShapeModels

Defines, for the type used to find shape model ModelNumber (e.g. ShapeModel), whether the searched models are permitted to lie partially outside the image (i.e. to extend beyond the image border).

The value of ShapeSearchBorderShapeModels is either "True", "False" or "System". If it is set to the default value "System", the system-wide setting for ShapeSearchBorderShapeModels (FALSE) is used.
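The three-state setting can be resolved to a plain yes/no decision as sketched below. The numeric codes follow the parameter table (0 = False, 1 = True, 2 = System); the function name and the constant are hypothetical.

```python
SYSTEM_WIDE_BORDER_SETTING = False  # the text states that the system-wide value is FALSE


def border_models_allowed(setting: int,
                          system_default: bool = SYSTEM_WIDE_BORDER_SETTING) -> bool:
    """Resolve ShapeSearchBorderShapeModels (0, 1 or 2) to a boolean."""
    if setting == 0:
        return False
    if setting == 1:
        return True
    if setting == 2:
        # "System": fall back to the system-wide value
        return system_default
    raise ValueError("ShapeSearchBorderShapeModels must be 0, 1 or 2")


print(border_models_allowed(2))  # default "System" resolves to the system-wide value
```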

Type

Select the type of Matching (how objects are detected).

When selecting the type, it is important to note the widely varying data size of the models in addition to the basic properties.

•A single model that has been taught-in via Shape model or Deformable shape model requires several kilobytes of RAM.

•Models that have been taught-in via NCC model require several megabytes of RAM, however.

Information:

When creating the application, it is essential to take into account the total memory requirements of the models with regard to the available RAM of the camera and the CPU! NCC models in particular should not be too large.

A set of 10-15 NCC models can quickly require over 100 MB, for example. The same models created as Shape model only require a few megabytes, however.

Information:

In comparison to the model, the drawn ROI should be very narrow for NCC models; otherwise, the search will also require a lot of memory.

For example, when a full search (ModelAngle from 0 to 360) is executed using a 1.3 MP sensor with an ROI area greater than 1/4 of the image size, the search can be aborted after a few minutes due to insufficient memory.

| Data type | Value | Information |
|---|---|---|
| USINT | 0 | NCC model (cross-correlation-based Matching). Insensitive to defocus, slight changes in shape and linear changes in illumination. Works well for structured objects. However, it does not work with clutter, occlusion, nonlinear illumination changes, scaling or multichannel images. The size of a taught-in model is in the megabyte range. |
| | 1 | Shape model (shape-based Matching). Unaffected by clutter, occlusion, nonlinear illumination changes, scaling, defocus and slight shape deformations. Works with multichannel images. Can be used for multiple models simultaneously. However, it has difficulty with some textures. The size of a taught-in model is in the kilobyte range. |
| | 2 | Deformable shape model (shape-based Matching). Objects that are slightly deformed compared to the learned model are either not found with the standard shape model or only found with low accuracy. This type of model can be used in these cases. The maximum permissible deformation with respect to the underlying model is set with parameter SearchMaxDeformation. The specification is in pixels between 0 (no deformation) and 32. If a deformation of up to 2 pixels should be permitted, for example, the parameter must be set to the value 2. If deformations are permitted (SearchMaxDeformation > 0), it is also recommended to use a least-squares variant for parameter SearchSubPixelMode in order to get a meaningful score. The size of a taught-in model is in the kilobyte range. |

ModelNumLevels

ModelNumLevel determines how often the taught-in model and the used image are each reserved in halved resolution (keyword: pyramid levels). ModelNumLevel = 1 is the original image displayed in the mapp Vision HMI application. With each additional level, the resolution is reduced by half. This means a quarter of the resolution of the original image if ModelNumLevel = 3.

The value should be as large as possible, as this will greatly speed up the process of finding the model. When choosing ModelNumLevels, however, it is important to ensure that the model is still recognizable at the highest pyramid level and that no structures are lost. If value 0 is specified for ModelNumLevels, the function selects the number of pyramid levels automatically.
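The halving per pyramid level can be made concrete with a short calculation. This is a sketch only: the function name is hypothetical, and rounding odd dimensions down is an assumption.

```python
def pyramid_resolutions(width: int, height: int, num_levels: int) -> list[tuple[int, int]]:
    """Return the image size at each pyramid level; level 1 is the original image."""
    if num_levels < 1:
        raise ValueError("num_levels must be at least 1")
    # Each additional level halves width and height (integer division, assumed).
    return [(width >> level, height >> level) for level in range(num_levels)]


for level, size in enumerate(pyramid_resolutions(1280, 1024, 3), start=1):
    print(level, size)
```

Printing the sizes for a candidate number of levels is a quick way to judge whether the model can still be recognizable at the highest level.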

Information:

The higher ModelNumLevels, the lower the resolution. This makes the function faster, but in some cases the reduced resolution changes the model so much that Matching no longer succeeds.

In rare cases, the number of pyramid levels determined automatically (ModelNumLevels = 0) may be too high or too low. If the number of pyramid levels is too high, the model may not be recognized in the image, or very low values of MinScore or ShapeSearchGreediness may have to be selected for the search to find the model. If the number of pyramid levels is too low, increased runtimes may result during searching. In these cases, the number of pyramid levels should be set manually. See also Matching - Influences on the grade value (Score) of Matching.

ModelAngleStart

Parameter ModelAngleStart sets the start angle for a defined range of angles for the possible rotations of the model in the image.

ModelAngleExtent

Parameter ModelAngleExtent defines the range of angles after a defined start angle for the possible rotations of the model in the image.

When executing the search, the model can therefore be found only in this angular range.

ModelAngleStep

Parameter ModelAngleStep specifies the step size between angles in the selected range and therefore the achievable angular accuracy. The value should be chosen based on the size of the object: smaller models only have a small number of distinguishable discrete rotations in the image, so ModelAngleStep should be larger for smaller models. If ModelAngleExtent is not an integer multiple of ModelAngleStep, ModelAngleStep is adjusted accordingly.

To ensure that the search for model instances without rotation returns angles of exactly 0.0, the angle range of possible rotations is adjusted as follows: If no positive integer n exists for which ModelAngleStart + n × ModelAngleStep is exactly zero, ModelAngleStart is decreased by at most ModelAngleStep, and ModelAngleExtent is increased by ModelAngleStep.
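The adjustment of the angle range can be sketched as follows. This is one plausible reading of the description, not the library's implementation. In particular, how the step is "adjusted accordingly" is not specified, so rounding to the nearest whole number of steps is an assumption, and the function name is hypothetical.

```python
import math


def align_angle_range(start: float, extent: float, step: float) -> tuple[float, float, float]:
    """Return (start, extent, step) adjusted so that angle 0.0 lies on the step grid."""
    # Assumption: the step is stretched or shrunk so that it divides the extent evenly.
    n_steps = max(1, round(extent / step))
    step = extent / n_steps

    # Number of steps from the start angle to 0; must be a whole number.
    steps_to_zero = -start / step
    if not math.isclose(steps_to_zero, round(steps_to_zero), abs_tol=1e-9):
        # Move the start down to the next grid point (by at most one step)
        # and enlarge the extent by one step, as described above.
        start = math.floor(start / step) * step
        extent += step
    return start, extent, step


print(align_angle_range(-0.39, 0.79, 0.1))
```

With the default range (-0.39, 0.79) and a requested step of 0.1, the sketch yields a step of 0.09875, a start of -0.395 and an extent of 0.88875, so that four steps from the start land exactly on 0.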

ModelMetric

Parameter ModelMetric specifies the conditions under which the pattern in the image is still recognized (i.e. the type of "metrics" used for matching).

| Data type | Value | Information |
|---|---|---|
| String | use_polarity (default value) | The object in the image must have the same contrast properties as the model. If the model is a bright object on a dark background, for example, the object will only be found in the image if it is brighter than the background. |
| | ignore_global_polarity | The object is found in the image even if the contrast is globally reversed. In the example above, the object would be found even if it is darker than the background. The execution time of the search increases slightly in this case. |
| | ignore_local_polarity | The model is found even if the contrast changes locally. This mode can be useful, for example, if the object consists of a part with a medium grayscale value on which either dark or light subobjects can lie. Since the runtime of the search increases considerably in this case, however, it usually makes more sense to teach-in several models and search for each one. |
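The effect of the three metrics can be illustrated with a toy score built from edge gradient directions. The sketch follows the normalized gradient dot-product similarity commonly described for shape-based matching. That the vision function computes its score exactly this way is an assumption, and all names are illustrative.

```python
import math


def _cosines(model_dirs, image_dirs):
    """Cosine of the angle between each model gradient and the image gradient."""
    result = []
    for (mx, my), (ix, iy) in zip(model_dirs, image_dirs):
        norm = math.hypot(mx, my) * math.hypot(ix, iy)
        result.append((mx * ix + my * iy) / norm if norm > 0 else 0.0)
    return result


def score(model_dirs, image_dirs, metric="use_polarity"):
    c = _cosines(model_dirs, image_dirs)
    n = len(c)
    if metric == "use_polarity":
        return sum(c) / n                  # reversed contrast counts against the match
    if metric == "ignore_global_polarity":
        return abs(sum(c)) / n             # one global sign flip is tolerated
    if metric == "ignore_local_polarity":
        return sum(abs(x) for x in c) / n  # every point may flip independently
    raise ValueError(f"unknown metric: {metric}")


model = [(1, 0), (0, 1)]
inverted = [(-1, 0), (0, -1)]   # contrast globally reversed
mixed = [(1, 0), (0, -1)]       # contrast reversed at one point only

for name in ("use_polarity", "ignore_global_polarity", "ignore_local_polarity"):
    print(name, score(model, inverted, name), score(model, mixed, name))
```

Only "ignore_local_polarity" gives the locally mixed case a full score, which matches the description of when that mode is needed.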

ModelScaleMin

ModelScaleMax

ModelScaleStep

If a model occurs in different sizes in the image, the teach-in process and searching for it can be implemented with scaling parameters ModelScaleMin, ModelScaleMax and ModelScaleStep as well as SearchScaleMin and SearchScaleMax.

Scaling is isotropic, i.e. the same in all directions.

For the teach-in process, it is important to note that the larger the scaling range defined by ModelScaleMin, ModelScaleMax and ModelScaleStep, the more data is generated during the teach-in process, which slows it down. The values defined here are limits that must be adhered to when searching via SearchScaleMin and SearchScaleMax. The limits required for the search should therefore already be known during the teach-in process.
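A simple consistency check for the two scale ranges might look like this. It is a sketch: the function name is hypothetical, and what the vision function does with an out-of-range search setting is not stated in this section.

```python
def search_scale_within_model(model_min: float, model_max: float,
                              search_min: float, search_max: float) -> bool:
    """Check that the search scale range stays inside the taught-in scale range."""
    if model_min > model_max or search_min > search_max:
        raise ValueError("each maximum must be greater than or equal to its minimum")
    return model_min <= search_min and search_max <= model_max


# Defaults from the parameter table: 0.9 to 1.1 for both model and search.
print(search_scale_within_model(0.9, 1.1, 0.9, 1.1))
print(search_scale_within_model(0.9, 1.1, 0.8, 1.05))
```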

ModelOptimization

For particularly large models, it can be useful to reduce the number of model points by setting parameter ModelOptimization to a value other than "none". If ModelOptimization = "none", all model points are saved. Otherwise, the number of points is reduced according to parameter ModelOptimization. If the number of points is reduced, it may be necessary to set parameter ShapeSearchGreediness to a lower value (for example, 0.7 or 0.8) when executing the search. For smaller models, reducing the number of points typically does not speed up the search. If "auto" is specified for ModelOptimization, the reduction of points is determined automatically during teach-in of the model.

ModelContrastMin

ModelContrastMax

ModelMinSize

If a model in the image should have a certain contrast, the teach-in process and searching for it can be implemented with contrast parameters ModelContrastMin, ModelContrastMax and ModelMinSize as well as SearchMinContrast. The contrast parameters are particularly suitable for detecting edges for shape-based models.

The parameters are used to define the gray value contrast that the individual pixels of the model must have. Contrast is a measure of the local grayscale value differences between the object and background as well as between different parts of the object. The contrast should be selected so that the significant features of a searched pattern (i.e. all required edges) are used for the model.

It is also possible to determine the contrast automatically by setting ModelContrastMin, ModelContrastMax and ModelMinSize to -1. The 3 parameters are then written to automatically during subsequent generation of the model.

In certain cases, determining the contrast threshold values automatically may not bring about the desired result, for example if certain model components should be excluded from or included in the model, or if the object is composed of several different contrast levels. In these cases, selecting the parameters manually is preferred.
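The setting options given for ModelContrastMin, ModelContrastMax and ModelMinSize in the parameter table can be read as a decision table. The sketch below transcribes those rules one by one. "High" in the table is interpreted as Max, the returned strings are copied from the table, and the function name is hypothetical.

```python
def contrast_setting(c_min: float, c_max: float, min_size: float):
    """Map (ModelContrastMin, ModelContrastMax, ModelMinSize) to the resulting setting."""
    if c_min == -1 and c_max == -1 and min_size == -1:
        return "auto"
    if c_min == -1 and c_max == -1 and min_size >= 0:
        return ["auto_contrast_hyst", min_size]
    if min_size == -1 and c_min >= 0 and c_max >= 0 and c_min < c_max:
        return ["auto_minsize", c_min, c_max]      # valid hysteresis pair
    if min_size == -1 and c_min >= 0 and c_max < 0:
        return ["auto_minsize", c_min]             # single contrast value in Min
    if c_min == -1 and c_max <= -2 and min_size >= 0:
        return ["auto_contrast", min_size]
    if c_min == -1 and c_max == -2 and min_size == -1:
        return ["auto_contrast", "auto_minsize"]
    return [c_min, c_max, min_size]                # everything specified manually


print(contrast_setting(-1, -1, -1))
print(contrast_setting(20, 40, -1))
print(contrast_setting(20, 40, 10))
```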

SearchNumLevels

SearchNumLevels defines the number of pyramid levels that should be used in the search. If necessary, the number of levels is trimmed to the range specified with ModelNumLevels when the model was generated. If SearchNumLevels is specified as 0, the number specified with ModelNumLevels is used.

In some cases, the number of pyramid levels (for example, when determined automatically with ModelNumLevels during the teach-in process for the model) may be too high. Potentially good Matching candidates that would have had a very high final score (high Score(n)) are then already excluded at the highest pyramid level and thus not found (see also Matching - Influences on the grade value (Score) of Matching).

Instead of now setting MinScore to a very low value to find all matches, a slightly lower value than in ModelNumLevels can be used for SearchNumLevels. This often achieves better results in terms of speed and robustness.
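How the two level settings combine can be sketched as follows. The function name is hypothetical, and model_num_levels is assumed to be the number of levels actually stored in the model, i.e. already resolved if ModelNumLevels was 0 (auto).

```python
def effective_search_levels(search_num_levels: int, model_num_levels: int) -> int:
    """Number of pyramid levels used by the search."""
    if search_num_levels == 0:
        # 0 means: use the number of levels of the model
        return model_num_levels
    # Otherwise the value is trimmed to what the model provides.
    return min(search_num_levels, model_num_levels)


print(effective_search_levels(0, 5))  # take over the model's levels
print(effective_search_levels(4, 5))  # search one level lower than the model
```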

SearchAngleStart

Parameters SearchAngleStart and SearchAngleExtent define the angle range in which the model is searched for. If necessary, the angle range is trimmed to the range that was specified when the model was created during the teach-in process. In particular, this means that the angle ranges of the model and the search must overlap. It is also important to note that in some cases, instances may be found whose rotation is slightly outside the specified angle range. This can occur if the specified angle range is smaller than the range specified when the model was created.
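Trimming the search range to the model range amounts to intersecting two intervals. A minimal sketch, assuming plain intervals without wrap-around at 360 and using a hypothetical function name:

```python
def clip_search_angles(model_start: float, model_extent: float,
                       search_start: float, search_extent: float) -> tuple[float, float]:
    """Return (start, extent) of the search range trimmed to the model range."""
    start = max(model_start, search_start)
    end = min(model_start + model_extent, search_start + search_extent)
    if end < start:
        raise ValueError("search and model angle ranges do not overlap")
    return start, end - start


# Model taught in for -45..45, search requested for -90..-30:
print(clip_search_angles(-45.0, 90.0, -90.0, 60.0))
```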

SearchAngleExtent

See SearchAngleStart.

SearchSubPixelMode

Parameter SearchSubPixelMode specifies whether extraction should be performed with subpixel accuracy.

If SearchSubPixelMode is set to "none", the position of the model is only determined with pixel accuracy, and the rotation only with the angular resolution specified when the model was taught-in.

If SearchSubPixelMode is set to "interpolation", both the position and the rotation are determined with subpixel accuracy. The position of the model is interpolated using function Score in this case. This mode requires almost no computing time and provides sufficient accuracy in most applications.

In some applications, the highest possible accuracy is important. In these cases, the position of the model can be determined by least-squares adjustment, i.e. by minimizing the distances between the model points and the corresponding pixels. In contrast to "interpolation", this mode requires additional computing time. The accuracy with which the minimum distance is searched can be defined with the different modes ("least_squares", "least_squares_high" and "least_squares_very_high"). The higher the accuracy selected, however, the longer the subpixel extraction takes.

Information:

"interpolation" should normally be selected for SearchSubPixelMode. If least-squares adjustment is desired, "least_squares" should be selected since this provides the optimal trade-off between runtime and accuracy.

In some cases, objects that appear slightly deformed in the image compared to the model are either not found or only found with low accuracy. For such objects, it is possible to additionally specify the maximum permissible object deformation in pixels in parameter SearchSubPixelMode ("max_deformation 1" to "max_deformation 6").
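Since SearchSubPixelMode is a string parameter, checking the value before writing it can prevent typos. The allowed values below are the ones listed in the parameter table. The helper itself is a hypothetical sketch.

```python
BASE_MODES = {
    "none",
    "interpolation",
    "least_squares",
    "least_squares_high",
    "least_squares_very_high",
}
DEFORMATION_VALUES = {"1", "2", "3", "4", "5", "6"}


def is_valid_subpixel_mode(mode: str) -> bool:
    """Accept the base modes and 'max_deformation 1' to 'max_deformation 6'."""
    if mode in BASE_MODES:
        return True
    parts = mode.split()
    return (len(parts) == 2
            and parts[0] == "max_deformation"
            and parts[1] in DEFORMATION_VALUES)


print(is_valid_subpixel_mode("least_squares"))
print(is_valid_subpixel_mode("max_deformation 2"))
print(is_valid_subpixel_mode("max_deformation 7"))
```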

SearchMaxDeformation

This parameter applies only to the selected deformable shape model type.

Objects that appear slightly deformed in the image compared to the model can be compensated with this parameter. The maximum permissible deformation with respect to the underlying model is set with SearchMaxDeformation.

The specification is in pixels between 0 (no deformation) and 32. If a deformation of up to 2 pixels should be permitted, for example, the parameter must be set to the value 2.

In addition to SearchMaxDeformation, parameter SearchSubPixelMode can be used to specify the deformation.

Information:

Using this parameter results in an increase of the runtime.

SearchMinContrast

SearchMinContrast defines the minimum grayscale value contrast that the model must have in the image in order to be detected. In other words, this parameter represents the boundary between the pattern and noise in the image. A good choice is therefore the range of grayscale value changes caused by the noise in the image. If the grayscale values fluctuate within a range of 10 grayscale levels due to noise, for example, SearchMinContrast should be set to 10. SearchMinContrast must be less than the contrast set for the model (see ModelContrastMin, ModelContrastMax and ModelMinSize). If the model should be recognized later in very low-contrast images, SearchMinContrast must be set to a correspondingly low value. If a model with significant occlusion should be detected, SearchMinContrast should be chosen slightly greater than the grayscale value range caused by the noise to ensure robust and accurate position estimation of the occluded model.

If SearchMinContrast is set to auto (-1), the minimum contrast is automatically selected based on the noise in the model image. An automatic determination therefore only makes sense if the image noise expected during detection corresponds to the noise in the model image. In some cases, it may also be useful to increase the automatically determined value in order to achieve greater robustness against occlusion.
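For a manual setting, the noise range can be estimated from repeated measurements. The sketch below assumes that the gray value of one pixel in a homogeneous, static image region has been sampled over several acquisitions. The function name and the sample values are illustrative.

```python
def noise_range(gray_values) -> int:
    """Range of gray value fluctuation (max - min) observed for a static pixel."""
    values = list(gray_values)
    if not values:
        raise ValueError("at least one gray value is required")
    return max(values) - min(values)


# Gray values of the same pixel in five consecutive images (made-up data):
samples = [120, 125, 118, 128, 123]
search_min_contrast = noise_range(samples)
print(search_min_contrast)
```

For the made-up samples, the fluctuation spans 10 grayscale levels, which corresponds to the SearchMinContrast = 10 example in the text.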

Information:

If several different shape models were taught-in, they should have been created with the same value of SearchMinContrast. If this is not the case, the lowest value from the taught-in models is used during execution (Execute).

SearchScaleMin

For the teach-in process, it is important to note that the larger the scaling range defined by ModelScaleMin, ModelScaleMax and ModelScaleStep, the more data is generated during the teach-in process, which slows it down. The values defined there are limits that must be adhered to when searching via SearchScaleMin and SearchScaleMax. The limits required for the search should therefore already be known during the teach-in process.

SearchScaleMax

See SearchScaleMin.

ModelMetricMode

Type of metric used for matching.

| Data type | Value | Information |
|---|---|---|
| String | use_polarity | The object in the image must have the same contrast properties as the model. If the model is a bright object on a dark background, for example, the object will only be found in the image if it is brighter than the background. |
| | ignore_global_polarity | The object is found in the image even if the contrast is globally reversed. In the example above, the object would be found even if it is darker than the background. The execution time of the search increases slightly in this case. |
| | ignore_local_polarity | The model is found even if the contrast changes locally. This mode can be useful, for example, if the object consists of a part with a medium grayscale value on which either dark or light subobjects can lie. Since the runtime of the search increases considerably in this case, however, it usually makes more sense to teach-in several models and search for each one. |

ReferencePositionX

Reference position of the model to which the output coordinates of PositionX refer (from Execute).

ReferencePositionY

Reference position of the model to which the output coordinates of PositionY refer (from Execute).